[This is a draft of a section of a forthcoming study on “A Flexible Governance Framework for Artificial Intelligence,” which I hope to complete shortly. I welcome feedback. I have also cross-posted this essay at Medium.]

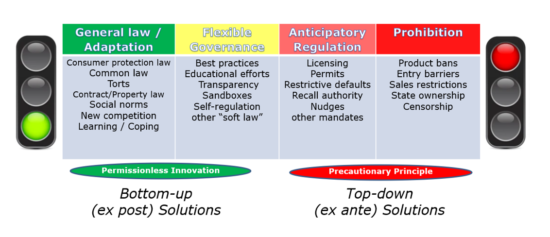

Debates about how to embed ethics and best practices into AI product design are where the question of public policy defaults becomes important. To the extent AI design becomes the subject of legal or regulatory decision-making, a choice must be made between two general approaches: the precautionary principle or the proactionary principle.[1] While there are many hybrid governance approaches in between these two poles, the crucial issue is whether the initial legal default for AI technologies will be set closer to the red light of the precautionary principle (i.e., permissioned innovation) or to the green light of the proactionary principle (i.e., permissionless innovation). Each governance default is discussed in turn below.

The Problem with the Precautionary Principle as the Policy Default for AI

The precautionary principle holds that innovations are to be curtailed or potentially even disallowed until the creators of those new technologies can prove that they will not cause any theoretical harms. The classic formulation of the precautionary principle can be found in the “Wingspread Statement,” which was formulated at an academic conference that took place at the Wingspread Conference Center in Wisconsin in 1998. It read: “Where an activity raises threats of harm to the environment or human health, precautionary measures should be taken even if some cause and effect relationships are not fully established scientifically.”[2] There have been many reformulations of the precautionary principle over time but, as legal scholar Cass Sunstein has noted, “in all of them, the animating idea is that regulators should take steps to protect against potential harms, even if causal chains are unclear and even if we do not know that those harms will come to fruition.”[3] Put simply, under almost all varieties of the precautionary principle, innovation is treated as “guilty until proven innocent.”[4] We can also think of this as permissioned innovation.

The logic animating the precautionary principle reflects a well-intentioned desire to play it safe in the face of uncertainty. The problem lies in the way this instinct gets translated into law and regulation. Making the precautionary principle the public policy default for any given technology or sector has a strong bearing on how much innovation we can expect to flow from it. When trial-and-error experimentation is preemptively forbidden or discouraged by law, it can limit many of the positive outcomes that typically accompany efforts by people to be creative and entrepreneurial. This can, in turn, give rise to different risks for society in terms of forgone innovation, growth, and corresponding opportunities to improve human welfare in meaningful ways.

St. Thomas Aquinas once observed that if the sole goal of a captain were to preserve their ship, the captain would keep it in port forever. But that clearly is not the captain’s highest goal. Aquinas was making a simple but powerful point: There can be no reward without some effort and even some risk-taking. Ship captains brave the high seas because they are in search of a greater good, such as recognition, adventure, or income. Keeping ships in port forever would preserve their vessels, but at what cost?

Similarly, consider the wise words of Wilbur Wright, who pioneered human flight. Few people better understood the profound risks associated with entrepreneurial activities. After all, Wilbur and his brother were trying to figure out how to literally lift humans off the Earth. The dangers were real, but worth taking. “If you are looking for perfect safety,” Wright said, “you would do well to sit on a fence and watch the birds.” Humans would never have taken to the skies if the Wright brothers had not gotten off the fence and taken the risks they did. Risk-taking drives innovation and, over the long haul, improves our well-being.[5] Nothing ventured, nothing gained.

These lessons can be applied to public policy by considering what would happen if, in the name of safety, public officials told captains to never leave port or told aspiring pilots to never leave the ground. The opportunity cost of inaction can be hard to quantify, but it should be clear that if we organized our entire society around a rigid application of the precautionary principle, progress and prosperity would suffer.

Heavy-handed preemptive restraints on creative acts can have deleterious effects because they raise barriers to entry, increase compliance costs, and create more risk and uncertainty for entrepreneurs and investors. Thus, it is the unseen costs, primarily in the form of forgone innovation opportunities, that make the precautionary principle so problematic as a policy default. This is why scientist Martin Rees speaks of “the hidden cost of saying no” that is associated with the precautionary principle.[6]

The precise way the precautionary principle leads to this result is that it derails the so-called learning curve by limiting opportunities to learn from trial-and-error experimentation with new and better ways of doing things.[7] The learning curve refers to the way that individuals, organizations, or industries are able to learn from their mistakes, improve their designs, enhance productivity, lower costs, and then offer superior products based on the resulting knowledge.[8] In his recent book, Where Is My Flying Car?, J. Storrs Hall documents how, over the last half century, “regulation clobbered the learning curve” for many important technologies in the U.S., especially nuclear, nanotech, and advanced aviation.[9] Hall shows how society was denied many important innovations due to endless foot-dragging or outright opposition to change from special interests, anti-innovation activists, and over-zealous bureaucrats.

In many cases, innovators don’t even know what they are up against because, as many scholars have noted, “the precautionary principle, in all of its forms, is fraught with vagueness and ambiguity.”[10] It creates confusion and fear about the wisdom of taking action in the face of uncertainty. Worst-case thinking paralyzes regulators who aim to “play it safe” at all costs. The result is an endless snafu of red tape as layer upon layer of mandates build up and block progress. Many scholars now decry this as a culture of “vetocracy,” a term describing the many veto points within modern political systems that hold back innovation, development, and economic opportunity.[11] This endless accumulation of potential veto points in the policy process in the form of mandates and restrictions can greatly curtail innovation opportunities. “Like sediment in a harbor, law has steadily accumulated, mainly since the 1960s, until most productive activity requires slogging through a legal swamp,” says Philip K. Howard, chair of Common Good.[12] “Too much law,” he argues, “can have similar effects as too little law,” because:

People slow down, they become defensive, they don’t initiate projects because they are surrounded by legal risks and bureaucratic hurdles. They tiptoe through the day looking over their shoulders rather than driving forward on the power of their instincts. Instead of trial and error, they focus on avoiding error.[13]

This is exactly why it is important that policymakers not get too caught up in attempts to preemptively resolve every hypothetical worst-case scenario associated with AI technologies. The problem with that approach was succinctly summarized by the political scientist Aaron Wildavsky when he noted, “If you can do nothing without knowing first how it will turn out, you cannot do anything at all.”[14] Or, as I have stated in a book on this topic, “living in constant fear of worst-case scenarios—and premising public policy on them—means that best-case scenarios will never come about.”[15]

This does not mean society should dismiss all concerns about the risks surrounding AI. Some technological risks do necessitate a degree of precautionary policy, but proportionality is crucial, notes Gabrielle Bauer, a Toronto-based medical writer. “Used too liberally,” she argues, “the precautionary principle can keep us stuck in a state of extreme risk-aversion, leading to cumbersome policies that weigh down our lives. To get to the good parts of life, we need to accept some risk.”[16] It is not enough to simply hypothesize that certain AI innovations might entail some risk. The critics need to prove it using risk analysis techniques that properly weigh both the potential costs and benefits.[17] Moreover, when conducting such analyses, the full range of trade-offs associated with preemptive regulation must be evaluated. Again, where precautionary constraints might deny society life-enriching devices or services, those costs must be acknowledged.

Generally speaking, the most extreme precautionary controls should only be imposed when the potential harms in question are highly probable, tangible, immediate, irreversible, catastrophic, or directly threatening to life and limb in some fashion.[18] In the context of AI and ML systems, it may be the case that such a test is satisfied already for law enforcement use of certain algorithmic profiling techniques. And that test is satisfied for so-called “killer robots,” or autonomous military technology.[19] These are often described as “existential risks.” The precautionary principle is the right default in these cases because it is abundantly clear how unrestricted use would have catastrophic consequences. For similar reasons, governments have long imposed comprehensive restrictions on certain types of weapons.[20] And although nuclear and chemical technologies have many important applications, their use must also be limited to some degree even outside of militaristic applications because they can pose grave danger if misused.

But the vast majority of AI-enabled technologies are not like this. Most innovations should not be treated the same as a hand grenade or a ticking time bomb. In reality, most algorithmic failures will be more mundane and difficult to foresee in advance. By their very nature, algorithms are constantly evolving because programs and systems are being endlessly tweaked by designers to improve them. In his books on the evolution of engineering and systems design, Henry Petroski has noted that “the shortcomings of things are what drive their evolution.”[21] The normal state of things is “ubiquitous imperfection,” he notes, and it is precisely that reality that drives efforts to continuously innovate and iterate.[22]

Regulations rooted in the precautionary principle hope to preemptively find and address product imperfections before any harm comes from them. In reality, and as explained further below, it is only through ongoing experimentation that we discover both the nature of failures and the knowledge needed to correct them. As Petroski observes, “the history of engineering in general, may be told in its failures as well as in its triumphs. Success may be grand, but disappointment can often teach us more.”[23] This is particularly true for complex algorithmic systems, where rapid-fire innovation and incessant iteration are the norm.

Importantly, the problem with precautionary regulation for AI is not just that it might be over-inclusive in seeking to regulate hypothetical problems that never develop. Precautionary regulation can also be under-inclusive by missing problematic behavior or harms that no one anticipated before the fact. Only experience and experimentation reveal certain problems.

In sum, we should not presume that there is a clear preemptive regulatory solution to every problem some people raise about AI, nor should we presume we can even accurately identify all such problems that might come about in the future. Moreover, some risks will never be eliminated entirely, meaning that risk mitigation is the wiser approach. This is why a more flexible bottom-up governance strategy focused on responsiveness and resiliency makes more sense than heavy-handed, top-down strategies that would only avoid risks by making future innovations extremely difficult if not impossible.

The “Proactionary Principle” is the Better Default for AI Policy

The previous section made it clear why the precautionary principle should generally not be used as our policy default if we hope to encourage the development of AI applications and services. What we need is a policy approach that:

- objectively evaluates the concerns raised about AI systems and applications;

- considers whether more flexible governance approaches might be available to address them; and,

- does so without resorting to the precautionary principle as a first-order response.

The proactionary principle is the better general policy default for AI because it satisfies these three objectives.[24] Philosopher Max More defines the proactionary principle as the idea that policymakers should, “[p]rotect the freedom to innovate and progress while thinking and planning intelligently for collateral effects.”[25] There are different names for this same concept, including the innovation principle, which Daniel Castro and Michael McLaughlin of the Information Technology and Innovation Foundation say represents the belief that “the vast majority of new innovations are beneficial and pose little risk, so government should encourage them.”[26] Permissionless innovation is another name for the same idea. Permissionless innovation refers to the idea that experimentation with new technologies and business models should generally be permitted by default.[27]

What binds these concepts together is the belief that innovation should generally be treated as innocent until proven guilty. There will be risks and failures, of course, but the permissionless innovation mindset views them as important learning experiences. These experiences are chances for individuals, organizations, and all of society to make constant improvements through incessant experimentation with new and better ways of doing things.[28] As Virginia Postrel argued in her 1998 book, The Future and Its Enemies, progress demands “a decentralized, evolutionary process” and mindset in which mistakes are not viewed as permanent disasters but instead as “the correctable by-products of experimentation.”[29] “No one wants to learn by mistakes,” Petroski once noted, “but we cannot learn enough from successes to go beyond the state of the art.”[30] Instead we must realize, as other scholars have observed, that “[s]uccess is the culmination of many failures”[31] and understand “failure as the natural consequence of risk and complexity.”[32]

This is why the default for public policy for AI innovation should, whenever possible, be more green lights than red ones to allow for the maximum amount of trial-and-error experimentation, which encourages ongoing learning.[33] “Experimentation matters,” observes Stefan H. Thomke of the Harvard Business School, “because it fuels the discovery and creation of knowledge and thereby leads to the development and improvement of products, processes, systems, and organizations.”[34]

Obviously, risks and mistakes are “the very things regulators inherently want to avoid,”[35] but “if innovators fear they will be punished for every mistake,” Daniel Castro and Alan McQuinn argue, “then they will be much less assertive in trying to develop the next new thing.”[36] And for all the reasons already stated, that would represent the end of progress because it would foreclose the learning process that allows society to discover new, better, and safer ways of doing things. Technology author Kevin Kelly puts it this way:

technologies must be evaluated in action, by action. We test them in labs, we try them out in prototypes, we use them in pilot programs, we adapt our expectations, we monitor their alterations, we redefine their aims as they are modified, we retest them given actual behavior, we re-direct them to new jobs when we are not happy with their outcomes.[37]

In other words, the proactionary principle appreciates the benefits that flow from learning by doing. The goal is to continuously assess and prioritize risks from natural and human-made systems alike, and then formulate and reformulate our toolkit of possible responses to those risks using the most practical and effective solutions available. This should make it clear that the proactionary approach is not synonymous with anarchy. Various laws, government bodies, and especially the courts play an important role in protecting rights, health, and order. But policies need to be formulated such that innovators and innovation are given the benefit of the doubt and risks are analyzed and addressed in a more flexible fashion.

Some of the most effective ways to address potential AI risks already exist in the form of “soft law” and decentralized governance solutions. These will be discussed at greater length below. But existing legal remedies include various common law solutions (torts, class actions, contract law, etc.), recall authority possessed by many regulatory agencies, and various consumer protection policies. Ex post remedies are generally superior to ex ante prior restraints if we hope to maximize innovation opportunities. Ex ante regulatory defaults are too often set closer to the red light of the precautionary principle and then enforced through volumes of convoluted red tape.

This is what the World Economic Forum has referred to as a “regulate-and-forget” system of governance,[38] or what others call a “build-and-freeze model” of regulation.[39] In such technological governance regimes, older rules are almost never revisited, even after new social, economic, and technical realities render them obsolete or ineffective.[40] A 2017 survey of the U.S. Code of Federal Regulations by Deloitte consultants revealed that 68 percent of federal regulations have never been updated and that 17 percent have only been updated once.[41] Public policies for complex and fast-moving technologies like AI cannot be set in stone and forgotten like that if America hopes to remain on the cutting edge of this sector.

Advocates of the proactionary principle look to counter this problem not by eliminating all laws or agencies, but by bringing them in line with flexible governance principles rooted in more decentralized approaches to policy concerns.[42] As many regulatory advocates suggest, it is important to embed or “bake in” various ethical best practices into AI systems to ensure that they benefit humanity. But this, too, is a process of ongoing learning and there are many ways to accomplish such goals without derailing important technological advances. What is often referred to as “value alignment” or “ethically-aligned design” is challenged by the fact that humans regularly disagree profoundly about many moral issues.[43] “Before we can put our values into machines, we have to figure out how to make our values clear and consistent,” says Harvard University psychologist Joshua D. Greene.[44]

The “Three Laws of Robotics” famously formulated decades ago by Isaac Asimov in his science fiction stories continue to be widely discussed today as a guide to embedding ethics into machines.[45] They read:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

What is usually forgotten about these principles, as AI expert Melanie Mitchell reminds us, is the way Asimov “often focused on the unintended consequences of programming ethical rules into robots,” and how he made it clear that, if applied too literally, “such a set of rules would inevitably fail.”[46]

This is why flexibility and humility are essential virtues when thinking about AI policy. The optimal governance regime for AI can be shaped by responsible innovation practices and embed important ethical principles by design without immediately defaulting to a rigid application of the precautionary principle.[47] In other words, an innovation policy regime rooted in the proactionary principle can also be infused with the same values that animate a precautionary principle-based system.[48] The difference is that the proactionary principle-based approach will look to achieve these goals in a more flexible fashion using a variety of experimental governance approaches and ex post legal enforcement options, while also encouraging still more innovation to solve problems past innovations may have caused.

To reiterate, not every AI risk is foreseeable, and many risks and harms are more amorphous or uncertain. In this sense, the wisest governance approach for AI was recently outlined by the National Institute of Standards and Technology (NIST) in its initial draft AI Risk Management Framework, which is a multistakeholder effort “to describe how the risks from AI-based systems differ from other domains and to encourage and equip many different stakeholders in AI to address those risks purposefully.”[49] NIST notes that the goal of the Framework is:

to be responsive to new risks as they emerge rather than enumerating all known risks in advance. This flexibility is particularly important where impacts are not easily foreseeable, and applications are evolving rapidly. While AI benefits and some AI risks are well-known, the AI community is only beginning to understand and classify incidents and scenarios that result in harm.[50]

This is a sensible framework for how to address AI risks because it makes it clear that it will be difficult to preemptively identify and address all potential AI risks. At the same time, there will be a continuing need to advance AI innovation while addressing AI-related harms. The key to striking that balance will be decentralized governance approaches and soft law techniques described below.

[Note: The subsequent sections of the study will detail how decentralized governance approaches and soft law techniques already are helping to address concerns about AI risks.]

Endnotes:

[1] Adam Thierer, Permissionless Innovation: The Continuing Case for Comprehensive Technological Freedom, 2nd ed. (Arlington, VA: Mercatus Center at George Mason University, 2016): 1-6, 23-38; Adam Thierer, Evasive Entrepreneurs & the Future of Governance (Washington, DC: Cato Institute, 2020): 48-54.

[2] “Wingspread Statement on the Precautionary Principle,” January 1998, https://www.gdrc.org/u-gov/precaution-3.html.

[3] Cass R. Sunstein, Laws of Fear: Beyond the Precautionary Principle (Cambridge, UK: Cambridge University Press, 2005). (“The Precautionary Principle takes many forms. But in all of them, the animating idea is that regulators should take steps to protect against potential harms, even if causal chains are unclear and even if we do not know that those harms will come to fruition.”)

[4] Henk van den Belt, “Debating the Precautionary Principle: ‘Guilty until Proven Innocent’ or ‘Innocent until Proven Guilty’?” Plant Physiology 132 (2003): 1124.

[5] H.W. Lewis, Technological Risk (New York: W.W. Norton & Co., 1990): x. (“The history of the human race would be dreary indeed if none of our forebears had ever been willing to accept risk in return for potential achievement.”)

[6] Martin Rees, On the Future: Prospects for Humanity (Princeton, NJ: Princeton University Press, 2018): 136.

[7] Adam Thierer, “Failing Better: What We Learn by Confronting Risk and Uncertainty,” in Sherzod Abdukadirov (ed.), Nudge Theory in Action: Behavioral Design in Policy and Markets (Palgrave Macmillan, 2016): 65-94.

[8] Adam Thierer, “How to Get the Future We Were Promised,” Discourse, January 18, 2022, https://www.discoursemagazine.com/culture-and-society/2022/01/18/how-to-get-the-future-we-were-promised.

[9] J. Storrs Hall, Where Is My Flying Car? (San Francisco: Stripe Press, 2021).

[10] Derek Turner and Lauren Hartzell Nichols, “The Lack of Clarity in the Precautionary Principle,” Environmental Values, Vol 13, No. 4 (2004): 449.

[11] William Rinehart, “Vetocracy, the Costs of Vetos and Inaction,” Center for Growth & Opportunity at Utah State University, March 24, 2022, https://www.thecgo.org/benchmark/vetocracy-the-costs-of-vetos-and-inaction; Adam Thierer, “Red Tape Reform is the Key to Building Again,” The Hill, April 28, 2022, https://thehill.com/opinion/finance/3470334-red-tape-reform-is-the-key-to-building-again.

[12] Philip K. Howard, “Radically Simplify Law,” Cato Institute, Cato Online Forum, http://www.cato.org/publications/cato-online-forum/radically-simplify-law.

[13] Ibid.

[14] Aaron Wildavsky, Searching for Safety (New Brunswick, NJ: Transaction Publishers, 1989): 38.

[15] Thierer, Permissionless Innovation, at 2.

[16] Gabrielle Bauer, “Danger: Caution Ahead,” The New Atlantis, February 4, 2022, https://www.thenewatlantis.com/publications/danger-caution-ahead.

[17] Richard B. Belzer, “Risk Assessment, Safety Assessment, and the Estimation of Regulatory Benefits” (Mercatus Working Paper, Mercatus Center at George Mason University, Arlington, VA, 2012), 5, http://mercatus.org/publication/risk-assessment-safety-assessment-and-estimation-regulatory-benefits; John D. Graham and Jonathan Baert Wiener, eds. Risk vs. Risk: Tradeoffs in Protecting Health and the Environment, (Cambridge, MA: Harvard University Press, 1995).

[18] Thierer, Permissionless Innovation, at 33-8.

[19] Adam Satariano, Nick Cumming-Bruce and Rick Gladstone, “Killer Robots Aren’t Science Fiction. A Push to Ban Them Is Growing,” New York Times, December 17, 2021, https://www.nytimes.com/2021/12/17/world/robot-drone-ban.html.

[20] Adam Thierer, “Soft Law: The Reconciliation of Permissionless & Responsible Innovation,” in Adam Thierer, Evasive Entrepreneurs & the Future of Governance (Washington, DC: Cato Institute, 2020): 183-240, https://www.mercatus.org/publications/technology-and-innovation/soft-law-reconciliation-permissionless-responsible-innovation.

[21] Henry Petroski, The Evolution of Useful Things (New York: Vintage Books, 1994): 34.

[22] Ibid., 27.

[23] Henry Petroski, To Engineer is Human: The Role of Failure in Successful Design (New York: Vintage, 1992): 9.

[24] James Lawson, These Are the Droids You’re Looking For: An Optimistic Vision for Artificial Intelligence, Automation and the Future of Work (London: Adam Smith Institute, 2020): 86, https://www.adamsmith.org/research/these-are-the-droids-youre-looking-for.

[25] Max More, “The Proactionary Principle (March 2008),” Max More’s Strategic Philosophy, March 28, 2008, http://strategicphilosophy.blogspot.com/2008/03/proactionary-principle-march-2008.html.

[26] Daniel Castro & Michael McLaughlin, “Ten Ways the Precautionary Principle Undermines Progress in Artificial Intelligence,” Information Technology and Innovation Foundation, February 4, 2019, https://itif.org/publications/2019/02/04/ten-ways-precautionary-principle-undermines-progress-artificial-intelligence.

[27] Thierer, Permissionless Innovation.

[28] Thierer, “Failing Better.”

[29] Virginia Postrel, The Future and Its Enemies (New York: The Free Press, 1998): xiv.

[30] Henry Petroski, To Engineer is Human: The Role of Failure in Successful Design (New York: Vintage, 1992): 62.

[31] Kevin Ashton, How to Fly a Horse: The Secret History of Creation, Invention, and Discovery (New York: Doubleday, 2015): 67.

[32] Megan McArdle, The Up Side of Down: Why Failing Well is the Key to Success (New York: Viking, 2014), 214.

[33] F. A. Hayek, The Constitution of Liberty (London: Routledge, 1960, 1990): 81. (“Humiliating to human pride as it may be, we must recognize that the advance and even preservation of civilization are dependent upon a maximum of opportunity for accidents to happen.”)

[34] Stefan H. Thomke, Experimentation Matters: Unlocking the Potential of New Technologies for Innovation (Harvard Business Review Press, 2003), 1.

[35] Daniel Castro and Alan McQuinn, “How and When Regulators Should Intervene,” Information Technology and Innovation Foundation Reports, (February 2015): 2, http://www.itif.org/publications/how-and-when-regulators-should-intervene.

[36] Ibid.

[37] Kevin Kelly, “The Pro-Actionary Principle,” The Technium, November 11, 2008, https://kk.org/thetechnium/the-pro-actiona.

[38] World Economic Forum, Agile Regulation for the Fourth Industrial Revolution (Geneva, Switzerland: 2020): 4, https://www.weforum.org/projects/agile-regulation-for-the-fourth-industrial-revolution.

[39] Jordan Reimschisel and Adam Thierer, “‘Build & Freeze’ Regulation Versus Iterative Innovation,” Plain Text, November 1, 2017, https://readplaintext.com/build-freeze-regulation-versus-iterative-innovation-8d5a8802e5da.

[40] Adam Thierer, “Spring Cleaning for the Regulatory State,” AIER, May 23, 2019, https://www.aier.org/article/spring-cleaning-for-the-regulatory-state.

[41] Daniel Byler, Beth Flores & Jason Lewris, “Using Advanced Analytics to Drive Regulatory Reform: Understanding Presidential Orders on Regulation Reform,” Deloitte, 2017, https://www2.deloitte.com/us/en/pages/public-sector/articles/advanced-analytics-federal-regulatory-reform.html.

[42] Adam Thierer, Governing Emerging Technology in an Age of Policy Fragmentation and Disequilibrium, American Enterprise Institute (April 2022), https://platforms.aei.org/can-the-knowledge-gap-between-regulators-and-innovators-be-narrowed.

[43] Brian Christian, The Alignment Problem: Machine Learning and Human Values (New York: W.W. Norton & Company, 2020).

[44] Joshua D. Greene, “Our Driverless Dilemma,” Science (June 2016): 1515.

[45] Susan Leigh Anderson, “Asimov’s ‘Three Laws of Robotics’ and Machine Metaethics,” AI and Society, Vol. 22, No. 4, (2008): 477-493.

[46] Melanie Mitchell, Artificial Intelligence: A Guide for Thinking Humans (New York: Farrar, Straus and Giroux, 2019): 126 [Kindle edition.]

[47] Thomas A. Hemphill, “The Innovation Governance Dilemma: Alternatives to the Precautionary Principle,” Technology in Society, Vol. 63 (2020): 6, https://ideas.repec.org/a/eee/teinso/v63y2020ics0160791x2030751x.html.

[48] Adam Thierer, “Are ‘Permissionless Innovation’ and ‘Responsible Innovation’ Compatible?” Technology Liberation Front, July 12, 2017, https://techliberation.com/2017/07/12/are-permissionless-innovation-and-responsible-innovation-compatible.

[49] The National Institute of Standards and Technology, “AI Risk Management Framework: Initial Draft,” (March 17, 2022): 1, https://www.nist.gov/itl/ai-risk-management-framework.

[50] Ibid., at 5.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
